Author

Marc Hobballah

(marc.hobballah@totalenergies.com) - (No affiliation)

It’s hard to go anywhere without hearing about the explosive growth of AI being deployed into production systems. Tech giants and startups alike are harnessing AI’s power, and if you’re not on board, you’re falling behind! News outlets are buzzing with stories like “AI outperforms doctors in diagnosing diseases” or “Self-driving cars are hitting the streets”. Exhilarating! Businesses everywhere, including many of our business units at TotalEnergies, now recognize more than ever that these AI systems are revolutionary and should be implemented to enhance their productivity. In my ten years as a data scientist, it has never been easier to convince a business unit that AI can transform its day-to-day work. There’s just one problem: AI systems are not (yet) a plug-and-play keyboard that you can simply connect to a business unit. In this article, I’ll share insights and lessons learned from leading a three-year pricing project, focusing on strategies for driving successful user adoption from a data scientist’s perspective.

You see, integrating AI into production systems is not an entirely new challenge. We’ve been building complex software with classical engineering frameworks since the early days of computing: think of any video game or any aviation control system.

In fact, almost every large-scale application relies on established methodologies, and enterprise-grade software solutions adhere to these principles. The more sophisticated the system, the greater its complexity and the need for meticulous engineering. That’s the intuitively obvious progression in software development. However, as we have observed at the TotalEnergies Digital Factory, when AI enters the equation, human factors become much more involved in the solution. This provides a great opportunity, but it also comes with many challenges.

At the TotalEnergies Digital Factory, we have deployed many different AI models to multiple TotalEnergies branches. I had the opportunity to work as the lead data scientist on a large-scale pricing project that brought together multiple AI models, each addressing a different aspect of a complex business pricing challenge, from predictive modeling to elasticity and optimization. This project has become one of the gold standards for demonstrating how complex AI models can be effectively deployed and managed within the TotalEnergies environment.

What is AI deployment anyway?

Nowadays, when someone says ‘AI deployment in production’, what they usually mean is that the AI code should respect software engineering principles: following software design patterns, versioning not only code but also AI models, implementing thorough unit and integration testing, and establishing robust CI/CD pipelines and deployment architectures tailored to AI. Specific to the AI context, monitoring is also crucial. Resources on these subjects are increasingly abundant, especially with the managed services offered by cloud providers. However, while these aspects are incredibly important (and let’s be honest, who doesn’t love seeing those GitHub Actions workflow boxes turn green?), they are far from being the most challenging problems we faced when deploying AI products.

So what the heck are you talking about?

Deploying AI solutions within a business unit is not just a technological upgrade: it may fundamentally alter workflows, decision-making processes, and even organizational culture.

While change management isn’t solely the responsibility of the data scientist (if you’re handling it alone, that’s a significant red flag; at the TotalEnergies Digital Factory, we have dedicated teams for this), I want to share what it means to take part in the change process from a data scientist’s perspective.

Collaboration is the pillar of change

Successfully deploying AI solutions in industry isn’t just about cutting-edge algorithms or sophisticated models; it’s fundamentally about collaboration among people across various disciplines. My aha moment came when I realized that, as a data scientist, I should consider end-users part of my squad. Diverse teams are better equipped to anticipate the operational shifts that new AI applications may require.

Remember, AI in industry is about helping the business in its decision-making process. You are trying to shift decision-making from an experience-based process to a data-driven one. By augmenting end-users’ own judgment and intuition with algorithmic recommendations, better answers can be reached. But for this approach to work, people at all levels must trust the algorithms’ suggestions and feel empowered to make decisions.

A helpful reminder: it’s the business that is deploying AI; you are deploying code on servers. In other words, business users are the ones regularly interacting with AI recommendations and incorporating AI into their workflows and decision-making processes. They therefore need to understand what’s going on, and you must acknowledge that; otherwise, adoption will be difficult. Here are some of my recommendations for a smoother adoption.

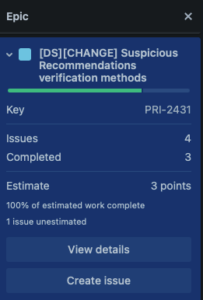

Materialize change as tickets

At some point in our project, we recognized that change needed to be treated as an epic, just like any other development epic in the project. Change tickets accounted for about 10% to 20% of the complexity points in each sprint. These tasks mainly consisted of workshops, presentations, active participation in daily processes, and live explanations of results. It’s also crucial not to overlook that change management itself demands development effort.
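Once change is tracked as an epic, its weight in each sprint can be measured like that of any other epic. The following is a minimal illustration, not our actual tooling: it assumes tickets are exported as dicts with hypothetical `sprint`, `epic` and `points` keys, and that change-management tickets carry the epic label `"change"`.

```python
from collections import defaultdict


def change_share_per_sprint(tickets):
    """Fraction of each sprint's complexity points spent on the change epic.

    `tickets` is a list of dicts with hypothetical keys "sprint", "epic", "points";
    change-management tickets are assumed to carry the epic label "change".
    """
    total = defaultdict(float)
    change = defaultdict(float)
    for ticket in tickets:
        total[ticket["sprint"]] += ticket["points"]
        if ticket["epic"] == "change":
            change[ticket["sprint"]] += ticket["points"]
    # Skip sprints with no points to avoid dividing by zero.
    return {sprint: change[sprint] / points for sprint, points in total.items() if points > 0}


# Hypothetical sprint export.
tickets = [
    {"sprint": 12, "epic": "elasticity", "points": 20},
    {"sprint": 12, "epic": "change", "points": 6},  # e.g. a results workshop with end-users
    {"sprint": 12, "epic": "optimization", "points": 14},
    {"sprint": 13, "epic": "change", "points": 3},
    {"sprint": 13, "epic": "monitoring", "points": 27},
]
for sprint, share in change_share_per_sprint(tickets).items():
    print(f"sprint {sprint}: {share:.0%} of points on change tickets")
```

Comparing these shares with the 10% to 20% range we saw can flag a sprint where change work may have been pushed aside.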

Even if you’re the most exceptional data scientist out there (I know you are), the business needs to see tangible outputs on its screens to trust the algorithms. This includes indicators, explanations of why the algorithms made specific recommendations and, depending on the use case, perhaps even the ability to adjust parameters. All of this constitutes additional development work. The earlier this development is anticipated, the fewer surprises will emerge, and the smoother the AI deployment process will be.

Use simpler models when possible!

In industry and high-stakes operations, using complex models can actually make adoption much more challenging. Let’s be honest: even you would have a hard time explaining some recommendations of that ‘very very’ deep neural network you developed. It’s not that complex models should never be used, but depending on the use case, it’s crucial to consider whether the additional complexity (for perhaps only a marginal 3% increase in accuracy) is truly worth it.

Trust is significantly easier to build with white-box models. In my experience, industrial business users are often not concerned with performance metrics like training and test accuracy. When a recommendation turns out to be wrong, telling them “it’s usually correct 95% of the time” isn’t likely to satisfy them. In extreme cases, it might even cast doubt on all algorithmic results. Providing clear explanations for why the model made a specific recommendation, on the other hand, can encourage end-users to be more vigilant when similar situations occur.
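For a linear (white-box) model, a per-recommendation explanation can be computed directly: each feature’s contribution is its coefficient times the gap between this case and a reference case, and the contributions add up exactly to the difference between the two predictions. A minimal sketch, assuming a hypothetical pricing model whose feature names (`product_cost`, `competitor_price`, `stock_days`), coefficients and reference case are invented for illustration:

```python
# Hypothetical white-box pricing model: price = INTERCEPT + sum(coef * feature).
# Feature names and coefficient values are illustrative only.
INTERCEPT = 2.0
COEFFICIENTS = {"product_cost": 1.25, "competitor_price": 0.5, "stock_days": -0.25}


def predict(features: dict) -> float:
    return INTERCEPT + sum(coef * features[name] for name, coef in COEFFICIENTS.items())


def explain(features: dict, reference: dict) -> list:
    """Per-feature contributions to the gap between this case and a reference case.

    For a linear model, the contributions add up exactly to
    predict(features) - predict(reference).
    """
    contributions = [
        (name, coef * (features[name] - reference[name]))
        for name, coef in COEFFICIENTS.items()
    ]
    # Largest effects first; ties keep the coefficient order.
    return sorted(contributions, key=lambda item: abs(item[1]), reverse=True)


typical = {"product_cost": 40.0, "competitor_price": 60.0, "stock_days": 10.0}
case = {"product_cost": 44.0, "competitor_price": 56.0, "stock_days": 18.0}
print(f"Recommended price: {predict(case):.2f} (typical case: {predict(typical):.2f})")
# → Recommended price: 80.50 (typical case: 79.50)
for name, value in explain(case, typical):
    print(f"  {name}: {value:+.2f}")
```

Sorting by absolute contribution lists the main drivers of a recommendation first, which is what an end-user needs to challenge or accept it.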

An effective strategy we’ve employed is to use explainable models as the foundation and then apply black-box models to fit the residuals. This approach allows decision-making to be based on the white-box model, while the complex model offers additional insights that can be valuable in certain contexts.
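The residual strategy can be sketched in a few lines. In this illustration, which is not the project’s actual model, the white-box part is a one-variable ordinary least squares fit on a driver `x`, and a k-nearest-neighbours regressor on `(x, z)` stands in for the black-box model (in practice it could be gradient boosting or a neural network). The names `x`, `z` and `ResidualStackedModel` are hypothetical. The black-box part is trained only on what the linear model leaves unexplained, and `predict` returns both the white-box value and the corrected value.

```python
import math
from dataclasses import dataclass, field


@dataclass
class ResidualStackedModel:
    """Hypothetical white-box + black-box stack: OLS on x, then k-NN on the residuals."""

    k: int = 3  # neighbours averaged by the stand-in black-box model
    slope: float = field(default=0.0, init=False)
    intercept: float = field(default=0.0, init=False)
    _points: list = field(default_factory=list, init=False, repr=False)
    _residuals: list = field(default_factory=list, init=False, repr=False)

    def white_box(self, x: float) -> float:
        # Interpretable part: the basis for the business decision.
        return self.intercept + self.slope * x

    def fit(self, xs, zs, ys):
        n = len(xs)
        x_mean, y_mean = sum(xs) / n, sum(ys) / n
        # Closed-form ordinary least squares with a single explanatory variable.
        sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
        sxx = sum((x - x_mean) ** 2 for x in xs)
        self.slope = sxy / sxx
        self.intercept = y_mean - self.slope * x_mean
        # The black-box part only learns what the linear model leaves unexplained.
        self._points = list(zip(xs, zs))
        self._residuals = [y - self.white_box(x) for x, y in zip(xs, ys)]
        return self

    def correction(self, x: float, z: float) -> float:
        # Mean residual of the k nearest training points (Euclidean distance on (x, z)).
        order = sorted(range(len(self._points)), key=lambda i: math.dist((x, z), self._points[i]))
        nearest = order[: self.k]
        return sum(self._residuals[i] for i in nearest) / len(nearest)

    def predict(self, x: float, z: float) -> tuple:
        # Return both values so the interpretable estimate is always visible.
        base = self.white_box(x)
        return base, base + self.correction(x, z)


# Synthetic data: y is linear in x, plus a +/-0.5 effect of z that OLS on x cannot capture.
xs = [0, 1, 2, 3, 4, 5, 6, 7]
zs = [0, 1, 1, 0, 0, 1, 1, 0]
ys = [1 + 2 * x + (0.5 if z else -0.5) for x, z in zip(xs, zs)]
model = ResidualStackedModel(k=1).fit(xs, zs, ys)
print(model.predict(2, 1))  # → (5.0, 5.5)
```

Keeping the two outputs separate lets end-users base their decision on the interpretable estimate while seeing how far the black-box correction would move it.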

Introduce scientific and methodical verifications

Beyond the usual monitoring of AI results in production, the business needs proper tools to ensure that the models’ outputs remain accurate and reliable. In our project, each end-user faced about 2,000 recommendations to validate daily, making it impractical to review each one beyond the most obvious cases. To address this challenge, we provided users with a daily list of about 50 recommendations: half were those where the model was least certain about the result, and the other half were randomly selected. This allowed end-users to dig deeper into these specific recommendations, either validating them or reporting any issues back to us.
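The daily sampling scheme can be sketched as follows. It assumes each recommendation comes with an uncertainty score (higher means less certain, for example the width of a prediction interval); the function name, the `(rec_id, uncertainty)` input format and the fixed seeds are illustrative assumptions. The random half is drawn from the recommendations not already selected, so the two halves never overlap.

```python
import random


def daily_review_list(recommendations, size=50, seed=None):
    """Pick recommendations for manual review: half least certain, half random.

    `recommendations` is a list of (rec_id, uncertainty) pairs; a higher
    uncertainty means the model is less sure (the score itself is an assumption).
    """
    n_uncertain = size // 2
    ranked = sorted(recommendations, key=lambda rec: rec[1], reverse=True)
    most_uncertain = ranked[:n_uncertain]
    # Draw the random half from the remaining pool so the two halves never overlap.
    pool = ranked[n_uncertain:]
    rng = random.Random(seed)
    random_half = rng.sample(pool, min(size - n_uncertain, len(pool)))
    return [rec_id for rec_id, _ in most_uncertain], [rec_id for rec_id, _ in random_half]


# Usage: about 2,000 daily recommendations with synthetic uncertainty scores.
rng = random.Random(0)
recs = [(f"rec-{i}", rng.random()) for i in range(2000)]
uncertain_ids, random_ids = daily_review_list(recs, size=50, seed=42)
print(len(uncertain_ids), len(random_ids))  # → 25 25
```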

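Crucially, this methodical verification process helps maintain the integrity of the AI system and build trust. The uncertain half targets the cases most likely to be wrong, while the random half can surface problems the model is confidently wrong about. By combining both, we can identify potential problems early on.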

In short, AI deployment is challenging, but by embracing change as part of your work, including it on your Scrum board, making user adoption a key metric in model selection, and implementing systematic verification methods, it can become much smoother and less of a hassle.